Bringing Alzheimer’s Detection into the Digital Age

With a hefty shove from COVID-19, dementia scientists have accelerated their efforts to swap in-person cognitive testing for online versions. At the Clinical Trials on Alzheimer's Disease conference held online and in Boston November 9-12, advances in two areas were on display: moving existing assessments online, and creating wholly new digital tests that operate as smartphone apps. The aim is to use frequent remote check-ins to detect mild cognitive impairment, and even preclinical cognitive changes, without people having to come to a clinic.

- Researchers create new digital cognitive tests.

- Digital tests largely hold up to in-person versions.

- More data needed to validate these smartphone assessments.

Researchers presented data on a range of digital tests. The electronic clinical dementia rating (CDR) is the first attempt to move this established workhorse for staging dementia online. Other tests were new. The German company neotiv claimed that its smartphone app measures episodic memory and identifies early cognitive decline nearly as well as an in-clinic assessment. Likewise, a test developed by the Canadian company Cogniciti correlated well with the Montreal Cognitive Assessment. Roche reported preliminary-use data on its smartphone app, which combines cognitive testing with lifestyle surveys and phone-use monitoring to paint a fuller picture of cognition. Two other tests, the Computerized Cognitive Composite (C3) and the Boston Remote Assessment for Neurocognitive Health (BRANCH), are being developed at Brigham and Women’s Hospital, Boston. C3 predicted cognitive decline over a year; preliminary data on BRANCH suggest that it tracks how well participants learned and remembered tasks. Both might be used in prescreening for clinical trials.

Going Digital

Yan Li of Washington University, St. Louis, and colleagues are bringing the CDR online. During this evaluation, an experienced clinician asks participants and, separately, their primary-care partners, questions to evaluate three cognitive and three functional domains. This requires a clinic visit typically lasting over 90 minutes.

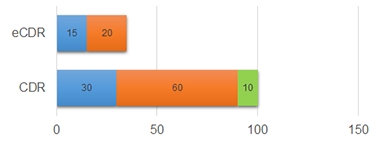

To create an electronic version, the scientists chose questions from the CDR that easily could be adapted for automatic scoring. They kept verbatim those that required yes/no responses, while converting open-ended questions to multiple-choice when possible. In all, the eCDR has 47 questions for participants and 66 for informants, which can be completed in half and one-third the time of the in-person versions, respectively (see image below). Li previously reported that such a shortened version of the CDR retained much of the traditional one's accuracy and reliability (Li et al., 2021).

Faster Online. The in-person CDR takes 30, 60, and 10 minutes on average for the participant (blue), partner (orange), and clinician (green) to complete; the electronic CDR takes a fraction of the time. [Courtesy of Yan Li, Washington University, St. Louis.]

Next, Li plans to compare the eCDR directly to the CDR by administering both to 700 study volunteers who either are cognitively normal or have MCI, and their partners at four U.S. clinics. So far, 204 pairs have joined this four-year study, in which they will take the CDR in the clinic annually and the eCDR on their computer at home every six months. Participants and partners are told to take their portions separately. Li acknowledged that there is no way to verify the participant gets no help.

At CTAD, Li showed preliminary results of eight people with MCI and 39 controls from the University of California, San Francisco, comparing 49 eCDR responses to the corresponding answers from the CDR. For 35 of them, the correlation was tight, with 11 showing complete concordance. For the other 14 responses, disagreements between the eCDR and CDR might be due at least partly to technicalities, Li said. All those disagreements involved written responses that require the participant to agree with his or her partner to be scored as correct. Other questions revealed the difficulty of capturing a clinician's discretion. For example, for the question “Where were you born?” a clinician might mark a participant's mere misspelling of the right city as correct, whereas the eCDR would label the response as incorrect. Importantly, all but one of the 14 most discordant answers were related to memory, rendering the correlation between the digital and in-person tests of this important domain the weakest (see image below).
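The misspelling problem can be illustrated with a small sketch. This is not how the eCDR scores answers; it only shows, under assumed names and an assumed similarity threshold, how an automatic scorer could accept a near-miss spelling that exact string matching would reject. The functions `normalize` and `lenient_match`, the threshold of 0.8, and the example city are all hypothetical.

```python
from difflib import SequenceMatcher


def normalize(text: str) -> str:
    """Lowercase, drop punctuation, and collapse runs of whitespace."""
    cleaned = "".join(ch for ch in text.lower() if ch.isalnum() or ch.isspace())
    return " ".join(cleaned.split())


def lenient_match(response: str, expected: str, threshold: float = 0.8) -> bool:
    """Return True if the response is close enough to the expected answer.

    The threshold is an illustrative assumption, not a value from the eCDR.
    """
    ratio = SequenceMatcher(None, normalize(response), normalize(expected)).ratio()
    return ratio >= threshold


# Hypothetical answers to "Where were you born?"
print("Saint Luois" == "Saint Louis")                # False: exact matching rejects the typo
print(lenient_match("Saint Luois", "Saint Louis"))   # True: the typo is tolerated
print(lenient_match("Chicago", "Saint Louis"))       # False: a different city is still wrong
```

A looser threshold forgives more typos but risks accepting wrong answers, which is one way to see why a clinician's discretion is hard to automate.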

All told, the eCDR agreed with CDR scores with an area under the curve (AUC) of 0.86. The AUC measures how well one score discriminates between groups defined by the other, with 1.0 being perfect discrimination and 0.5 being no better than chance.
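To make the AUC figure concrete, here is a minimal sketch of one standard way to compute it: the fraction of impaired/unimpaired pairs in which the impaired person gets the higher score, counting ties as half. The article does not say which groups the 0.86 separates or how it was calculated, so the function name `auc` and all scores below are hypothetical.

```python
def auc(scores_positive, scores_negative):
    """Probability that a random positive case outscores a random negative one.

    Ties count as half a win. This pairwise definition is equivalent to the
    area under the ROC curve.
    """
    wins = 0.0
    for p in scores_positive:
        for n in scores_negative:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(scores_positive) * len(scores_negative))


# Hypothetical digital-test scores, where higher means more impairment
impaired = [2.0, 1.5, 1.0, 0.5]
unimpaired = [0.5, 0.0, 0.0, 1.0]

print(auc(impaired, unimpaired))  # 0.875
```

A value of 1.0 would mean every impaired person outscored every unimpaired one; 0.5 would mean the score carries no information about group membership.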

CDR vs eCDR. Agreement between domain scores on the CDR and eCDR. [Courtesy of Yan Li, Washington University, St. Louis.]

The performance of the eCDR’s memory domain is a problem Li and colleagues hope to fix by testing different types of questions. Randall Bateman, also at Wash U, wondered if probing subjective memory in the eCDR could do the trick. “Capturing subjective impression of an individual’s change over time is such an important part of what makes the CDR strongly predictive,” he said. Thus far, Li has excluded questions prompting a subjective response because the automatic scoring algorithm cannot analyze open-ended responses, but she hopes artificial intelligence may change that. Natural-language processing algorithms may be able to identify key words or phrases in responses and assess their quality and accuracy.

Eric Siemers of Siemers Integration LLC likes the concept of an electronic CDR, because it might overcome the limitations of in-person sessions. "I think an eCDR could eliminate some of the subjectivity that the CDR has, which is why you need so much training to be able to give it effectively,” he told Alzforum.

What About New Tests?

Emrah Düzel of the German Center for Neurodegenerative Diseases (DZNE), Magdeburg, and colleagues at neotiv, a company he co-founded, are developing a smartphone app that probes episodic memory. It uses three tasks (see image below). The Mnemonic Discrimination for Objects and Scenes (MDT-OS) asks participants to discern if and how two pictures of a three-dimensional room are different. In the Object-in-Room Recall (ORR), the test taker sees two objects in a three-dimensional room and must recall the objects and their locations immediately, and again 30 minutes later. Complex Scene Recognition (CSR) involves identifying pictures as indoor or outdoor scenes and recalling after an hour which ones had been shown already. Each test takes 15 minutes.

Memory Triad. The neotiv app measures episodic memory using three tests. [Courtesy of Berron et al., medRxiv, 2021.]

At last year’s CTAD, Düzel had reported that ORR scores correlated with in-clinic neuropsychological test scores in 58 participants in the DZNE Longitudinal Cognitive Impairment and Dementia Study, a.k.a. DELCODE. Spanning healthy to AD, these volunteers have undergone extensive in-clinic cognitive testing, which makes them ideal candidates to evaluate a smartphone test. David Berron of DZNE, a neotiv co-founder, had said that ORR scores tracked with tau as per CSF and PET (Nov 2020 conference news).

This year, Düzel presented data from 102 DELCODE participants, including 25 cognitively normal people, seven cognitively normal first-degree relatives of people with AD, 48 people with subjective cognitive decline (SCD), and 22 with MCI as deemed by the CERAD neuropsychological test battery (Berron et al., 2021). The researchers compared a composite of their three tests against the parts of the Preclinical Alzheimer’s Cognitive Composite 5 that test episodic memory. Baseline PACC5 scores classified 29 volunteers as cognitively impaired, 73 as normal.

Participants downloaded the app onto a smartphone or tablet, and were asked to take one of the tests every two weeks for a year. After three cycles, they would have taken all three, which would generate a composite score. The study is ongoing. At the time of analysis, 87 participants had at least two composite scores. These correlated with baseline PACC5 scores and distinguished PACC5-defined normal from cognitively impaired people with an AUC of 0.9, a sensitivity of 83 percent, and a specificity of 74 percent (see image below).
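Sensitivity and specificity depend on where the cut-off is placed. The sketch below shows the arithmetic for a single cut-off, assuming, as in the neotiv figure, that lower composite scores mean worse cognition. The function name `sensitivity_specificity`, the scores, the labels, and the cut-off are hypothetical and are not taken from the DELCODE data.

```python
def sensitivity_specificity(scores, impaired, cutoff):
    """Classify scores below the cut-off as impaired and compare with labels.

    Returns (sensitivity, specificity):
      sensitivity = flagged impaired / all impaired
      specificity = unflagged unimpaired / all unimpaired
    """
    tp = fn = tn = fp = 0
    for score, is_impaired in zip(scores, impaired):
        flagged = score < cutoff
        if is_impaired and flagged:
            tp += 1
        elif is_impaired:
            fn += 1
        elif flagged:
            fp += 1
        else:
            tn += 1
    return tp / (tp + fn), tn / (tn + fp)


# Hypothetical composite scores with reference labels (True = impaired)
scores = [-1.2, -0.8, -0.3, 0.4, 0.1, 0.6, -0.5, 0.9, 1.1, 0.2]
labels = [True, True, True, True, False, False, False, False, False, False]

sens, spec = sensitivity_specificity(scores, labels, cutoff=-0.25)
print(round(sens, 2), round(spec, 2))  # 0.75 0.83
```

Moving the cut-off higher would flag more people, raising sensitivity at the cost of specificity; the AUC summarizes this trade-off across all possible cut-offs.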

Track with PACC. The neotiv composite correlated with scores from people the PACC5 had deemed cognitively impaired (red) or unimpaired (green). Dashed line denotes the app’s chosen cut-off; negative values mean worse cognition. [Courtesy of Berron et al., medRxiv, 2021.]

The scientists are monitoring how annual cognitive decline measured by their app holds up to PACC5 measurements in DELCODE. “PACC5 is sensitive to longitudinal change, and our digital tests have strong retest reliability, which are key to detecting decline over time,” Berron told Alzforum.

The Toronto-based company Cogniciti also develops digital tests. In its own effort to match results to established clinical testing, Theone Paterson of the University of Victoria, Canada, reported that portions of Cogniciti's cognitive test correlated well with in-clinic Montreal Cognitive Assessment scores in 40 cognitively normal adults and 51 with amnestic MCI. The digital face-name association and spatial working memory tasks predicted aMCI as accurately as did the MoCA, with AUCs of 0.76 and 0.71, respectively, Paterson reported at CTAD.

And Now for Preclinical Alzheimer's

Being more convenient than in-clinic tests, digital tests can be done more often. Kate Papp, Roos Jutten, and colleagues at Brigham and Women's Hospital, Boston, want to know if monthly checks can suss out subtle cognitive decline that yearly tests would miss. Papp asked 114 cognitively healthy volunteers from the Harvard Aging Brain Study to take the tablet-based Computerized Cognitive Composite (C3), which is a composite of the Cogstate brief battery, face-name associations, and an object-recall task. HABS volunteers did this once a month for a year (Aug 2019 conference news). They had amyloid and tau PET scans and in-clinic PACC5 testing at baseline and a year later.

This study's news had to do with practice effects, a known and nettlesome phenomenon in cognitive testing. All participants improved over the first three months of testing as they became familiar with the tasks, but these practice effects were smaller in people with high amyloid and tau burdens and in those whose cognition slipped faster on the PACC5. In fact, less improvement over the first three months on the C3 predicted who would decline by more than 0.10 standard deviations on the PACC5 with an AUC of 0.91 (see image below).
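One simple way to put a number on a practice effect is the slope of a straight line fitted to a person's early scores. The article does not describe how the C3 analysis quantified improvement, so the following is only a sketch under that assumption; the function name `practice_slope` and the monthly scores are hypothetical.

```python
def practice_slope(scores):
    """Ordinary least-squares slope of score against session index (0, 1, 2, ...)."""
    n = len(scores)
    if n < 2:
        raise ValueError("need at least two sessions to estimate a slope")
    mean_x = (n - 1) / 2
    mean_y = sum(scores) / n
    numerator = sum((i - mean_x) * (y - mean_y) for i, y in enumerate(scores))
    denominator = sum((i - mean_x) ** 2 for i in range(n))
    return numerator / denominator


# Hypothetical z-scores at baseline and the next three monthly sessions
strong_learner = [0.0, 0.3, 0.6, 0.9]
weak_learner = [0.0, 0.05, 0.1, 0.1]

print(round(practice_slope(strong_learner), 3))
print(round(practice_slope(weak_learner), 3))
```

In this toy example the second person gains far less per session than the first, the kind of blunted learning curve the researchers linked to faster decline.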

Can't Outpractice the C3. Changes in C3 score over the first three months (blue line) predicted rapid decline on PACC5 better than did baseline C3 scores (green) or PACC5 scores themselves (orange). [Courtesy of Roos Jutten, Brigham and Women’s.]

Not wanting to wait months for a cognitive gap to emerge, Papp and Daniel Soberanes of Brigham and Women’s turned to daily smartphone-based testing. For one week, 101 cognitively normal HABS participants 65 and older took the BRANCH test, which includes face-name association, grocery item and price recall, and digit-symbol pairing tasks (Papp et al., 2021). Here, too, all participants completed the tasks more accurately as the week went by. However, older people and those with worse baseline PACC5 scores learned less well than did younger people and those with better PACC5 scores. “Early analysis of biomarker data has also shown learning-curve differences based on amyloid status, which we are excited to explore further,” Soberanes wrote via email. The neuropsychologists believe these subtle practice effects over the short term could flag participants who have subtle cognitive decline for early prevention studies.

More Than Testing

Beyond dropping pen-and-paper for the screen, some scientists are creating new assessments that encompass broader measures, such as a person's sleep quality, social engagement, or mood. Foteini Orfaniotou of Roche, Basel, Switzerland, showed preliminary results of a proof-of-concept study. Roche's app combines cognitive and motor-function tests with lifestyle surveys completed on a smartphone the company gives to volunteers (see image below). Orfaniotou said the surveys add insight into why a participant's performance may fluctuate from day to day.

Loads of Data. A Roche app measures how lifestyle factors (green) affect cognitive and functional activities (orange). Passive monitoring lets scientists track a person's engagement with his or her smartphone and his or her physical activity patterns (blue). [Courtesy of Foteini Orfaniotou, Roche.]

Aiming to study 120 volunteers eventually, the researchers thus far have enrolled 10 cognitively normal people, 51 with SCD, and 26 with early AD from three clinics in the U.S. and two in Spain. A complete data readout is planned for the first half of 2022.

Participants are assigned seven daily tasks/surveys for a month. Most had no problem using the app; two, who had early AD, did not finish the study due to technical problems or anxiety. Regardless of cognitive status, all completed at least one activity on 96 percent of the days. DZNE's Berron was glad to see this adherence and thought it boded well for digital tests. “I’m optimistic that we can implement [these tests] long-term, and in bigger groups, especially if the assessments are less frequent,” he said.

Remote Digital Tech as a Therapeutic?

In the COVID era, younger and middle-aged adults quickly turned to technology to keep their social connections despite physical distance. Older people who have been slower to adapt find themselves more socially isolated, upping their dementia risk (Aug 2017 conference news; May 2019 news). Could technology actually slow dementia, not just track it?

Hiroko Dodge at the Oregon Health & Science University, Portland, thinks so. Her prior pilot study found that 30-minute daily video chats for six weeks improved language-based executive function in cognitively normal 80-year-olds (Dodge et al., 2015). At CTAD, Dodge showed early results from the larger Internet-based Conversational Engagement Clinical Trial. In I-CONECT, 86 cognitively normal people 75 and older, and 100 age-matched people with MCI, signed up for four 30-minute structured video chats per week for six months, then two per week for another six months (Yu et al., 2021). Trained interviewers prompted conversation with pictures and asked participants to discuss related memories (see image below).

Chatting for Cognition. Through a simple interface on a provided laptop (left), older adults video-chatted with trained interviewers who prompted conversation with pictures (right). [Courtesy of Hiroko Dodge, Oregon Health & Science University.]

Among 31 people with MCI whose six-month assessment was available at the time of analysis, those who video-chatted maintained their baseline MoCA scores, while those who received weekly phone check-ins as a control declined. The researchers are now analyzing one-year follow-up data and other outcome measures, including changes in language-based executive function and speech characteristics. Among 25 cognitively normal volunteers, those who video-chatted improved in category fluency, a sign of better executive function.

Dodge thinks remote studies, such as I-CONECT, should combine digital cognitive tests with digital biomarkers, and analysis of speech patterns on the I-CONECT recorded video-chats is ongoing. “Identifying early decline in cognition by monitoring speech changes would be wonderful,” Dodge told Alzforum. (See Part 8 of this series).

Home or Clinic, or Home and Clinic?

Will any of this ever replace in-clinic tests? Alexandra Atkins, VeraSci, Durham, North Carolina, and colleagues think not. They asked 20 older adults with SCD and 41 age-matched normal controls to take the tablet-based Brief Assessment of Cognition (BAC), which includes symbol coding, verbal memory, visuospatial working memory, and verbal fluency tasks. The volunteers took the tests both in the clinic and at home. Some controls did unexpectedly better on the verbal memory test at home, while all participants performed similarly on the other three tasks regardless of where they took them. Atkins did not speculate on why people did better at home.

This was but a small snapshot of the BAC, of course, which speaks to a limitation of digital cognitive assessments thus far—too little data, especially of the longitudinal sort. Rhoda Au, Boston University, noted that much of the current work is still trying to tease out patterns. “To get to digital biomarkers, we will have to invest as much effort as we have put into fluid markers,” she wrote to Alzforum.

Li noted that most studies these days are proof-of-concept to get a sense of what might be feasible and reliable. “Validation in a broader population is still needed to evaluate test performance,” she wrote. Sandra Weintraub, Northwestern University, Chicago, agreed. “Although a worthwhile effort, much work remains to be done to introduce these screens into practice,” she wrote.—Chelsea Weidman Burke

References

News Citations

- Learning Troubles Spied by Smartphone Track with Biomarkers

- Technology Brings Dementia Detection to the Home

- Lancet Commission Claims a Third of Dementia Cases Are Preventable

- WHO Weighs in With Guidelines for Preventing Dementia

- The Rain in Spain: Move Over Higgins, AI Spots Speech Patterns

Paper Citations

- Li Y, Xiong C, Aschenbrenner AJ, Chang CH, Weiner MW, Nosheny RL, Mungas D, Bateman RJ, Hassenstab J, Moulder KL, Morris JC. Item response theory analysis of the Clinical Dementia Rating. Alzheimers Dement. 2021 Mar;17(3):534-542. Epub 2020 Nov 20.

- Berron D, Glanz W, Billette OV, Grande X, Güsten J, Hempen I, Naveed MH, Butryn M, Spottke A, Buerger K, Perneczky R, Schneider A, Teipel S, Wiltfang J, Wagner M, Jessen F, Düzel E, the DELCODE Consortium. A Remote Digital Memory Composite to Detect Cognitive Impairment in Memory Clinic Samples in Unsupervised Settings using Mobile Devices. medRxiv. November 14, 2021.

- Papp KV, Samaroo A, Chou HC, Buckley R, Schneider OR, Hsieh S, Soberanes D, Quiroz Y, Properzi M, Schultz A, García-Magariño I, Marshall GA, Burke JG, Kumar R, Snyder N, Johnson K, Rentz DM, Sperling RA, Amariglio RE. Unsupervised mobile cognitive testing for use in preclinical Alzheimer's disease. Alzheimers Dement (Amst). 2021;13(1):e12243. Epub 2021 Sep 30.

- Dodge HH, Zhu J, Mattek N, Bowman M, Ybarra O, Wild K, Loewenstein DA, Kaye JA. Web-enabled Conversational Interactions as a Means to Improve Cognitive Functions: Results of a 6-Week Randomized Controlled Trial. Alzheimers Dement (N Y). 2015 May;1(1):1-12.

- Yu K, Wild K, Potempa K, Hampstead BM, Lichtenberg PA, Struble LM, Pruitt P, Alfaro EL, Lindsley J, MacDonald M, Kaye JA, Silbert LC, Dodge HH. The Internet-Based Conversational Engagement Clinical Trial (I-CONECT) in Socially Isolated Adults 75+ Years Old: Randomized Controlled Trial Protocol and COVID-19 Related Study Modifications. Front Digit Health. 2021;3:714813. Epub 2021 Aug 25.
